Often, after you’ve enabled VCN Flow Logs (or any logs whatsoever!), there will be a requirement to get those logs out to some other system. VCN Flow Logs is one example; I’ve also configured Cloud Guard logs/alerts in a similar way to what I’ll show here.

The key point is to get the logs into OCI Streaming via Service Connector, so that any system with access to the Streaming API and to that stream can consume them. You can even consume the logs from Azure or AWS services, no problem! OCI Streaming is a Kafka-compatible service, so there won’t be any issues as long as the target system supports the Kafka APIs.
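To make the Kafka compatibility concrete, here is a minimal sketch of the client settings a Kafka consumer would need for an OCI stream pool. It assumes the SASL/PLAIN username format `<tenancy>/<user>/<stream pool OCID>`, an OCI auth token as the password, and a bootstrap server of the form `cell-1.streaming.<region>.oci.oraclecloud.com:9092`. Always copy the exact value from the stream pool's Kafka connection settings. The dictionary keys follow librdkafka naming (as used by confluent-kafka); the function name and all example values are hypothetical.

```python
def oci_kafka_config(region: str, tenancy: str, username: str,
                     stream_pool_ocid: str, auth_token: str) -> dict:
    """Build Kafka client settings for an OCI Streaming stream pool.

    Assumption: the bootstrap server follows the
    cell-1.streaming.<region>.oci.oraclecloud.com:9092 pattern; verify it
    against the stream pool's Kafka connection settings in the console.
    """
    # SASL/PLAIN username is assumed to be <tenancy>/<user>/<stream pool OCID>.
    sasl_user = f"{tenancy}/{username}/{stream_pool_ocid}"
    return {
        "bootstrap.servers": f"cell-1.streaming.{region}.oci.oraclecloud.com:9092",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.username": sasl_user,
        # An OCI auth token (not the console password) is used as the password.
        "sasl.password": auth_token,
    }
```

A Kafka consumer would add its own settings (such as `group.id`) on top of this dictionary and subscribe to the stream name, which acts as the Kafka topic name.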

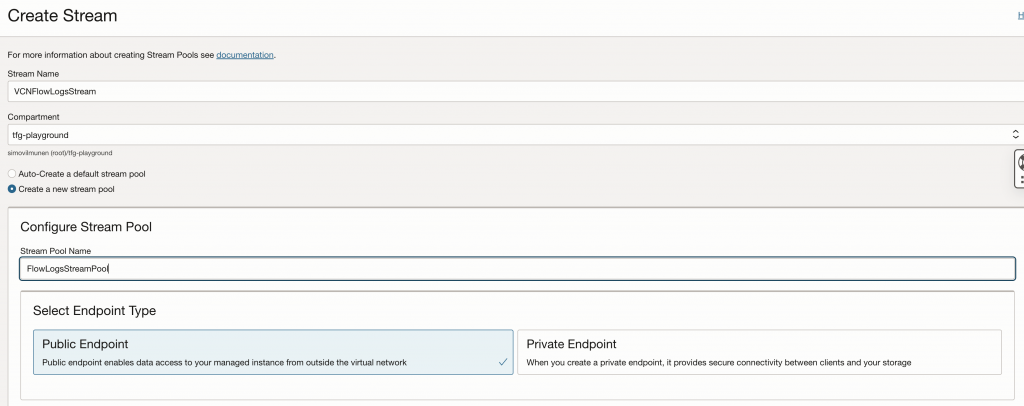

Creating a Stream

Before we can get the logs into OCI Streaming, we have to create a Stream and a Stream Pool. To do that, navigate to Analytics & AI -> Messaging -> Streaming.

I’ll create the stream and manually configure a new stream pool at the same time. If you don’t want a public endpoint, you can define a private one. Remember that whoever consumes the stream needs to be authenticated and have the correct policies!
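As an illustration of "correct policies", here is a minimal sketch that builds an IAM policy statement for a consuming group. It assumes `stream-pull` is the resource type needed to read messages and that `target.stream.id` can narrow the grant to a single stream. The group, compartment and OCID values are hypothetical, and your consumers may need additional statements depending on how they connect.

```python
from typing import Optional


def stream_consumer_policy(group: str, compartment: str,
                           stream_ocid: Optional[str] = None) -> str:
    """Return a policy statement letting a group read streams in a compartment.

    Assumption: stream-pull is the resource type for consuming messages.
    """
    statement = f"Allow group {group} to use stream-pull in compartment {compartment}"
    if stream_ocid:
        # Narrow the grant to one stream instead of every stream in the compartment.
        statement += f" where target.stream.id = '{stream_ocid}'"
    return statement
```

Scoping the statement to one stream keeps a consumer that only needs flow logs from reading every other stream in the compartment.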

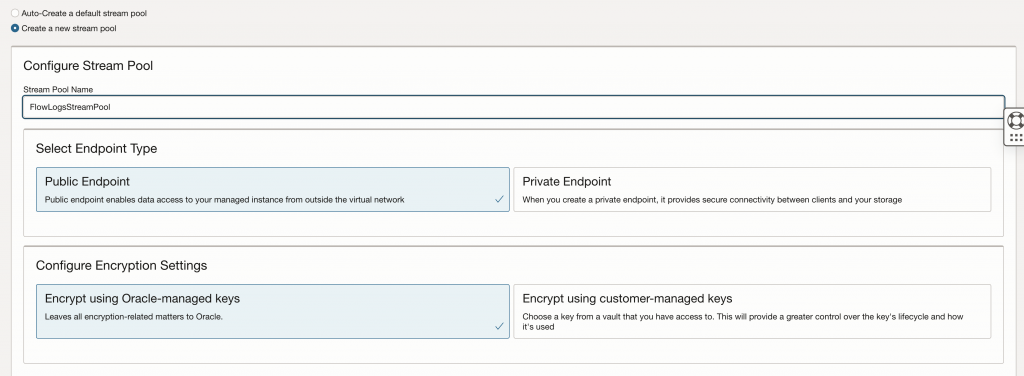

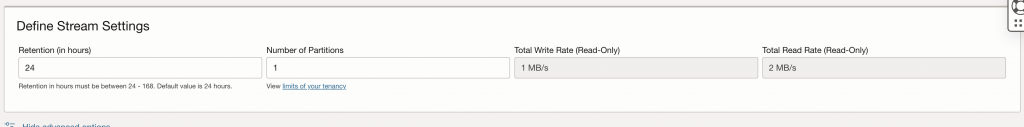

I could use customer-managed keys, but for now we’ll use Oracle-managed keys (the default). I can also define the stream settings: retention and number of partitions. The number of partitions determines the available write and read throughput (1 MB/s write and 2 MB/s read per partition). It also impacts the cost!
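To see how the partition count follows from expected throughput, here is a rough sizing sketch. It assumes the per-partition limits of 1 MB/s write and 2 MB/s read, that the read limit applies to all consumers of a partition combined, and that messages spread evenly across partitions. The function name and numbers are illustrative only.

```python
import math

# Assumed per-partition limits, as described above.
WRITE_MB_S_PER_PARTITION = 1.0
READ_MB_S_PER_PARTITION = 2.0


def partitions_needed(write_mb_s: float, read_mb_s: float) -> int:
    """Smallest partition count that covers both the write and the read rate.

    read_mb_s is the total read rate of all consumers reading the stream.
    """
    by_write = math.ceil(write_mb_s / WRITE_MB_S_PER_PARTITION)
    by_read = math.ceil(read_mb_s / READ_MB_S_PER_PARTITION)
    # A stream always has at least one partition.
    return max(1, by_write, by_read)
```

For example, 2.5 MB/s of flow logs read by three independent consumers (7.5 MB/s of reads) needs 3 partitions for writes but 4 for reads, so the read side decides: `partitions_needed(2.5, 7.5)` returns 4.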

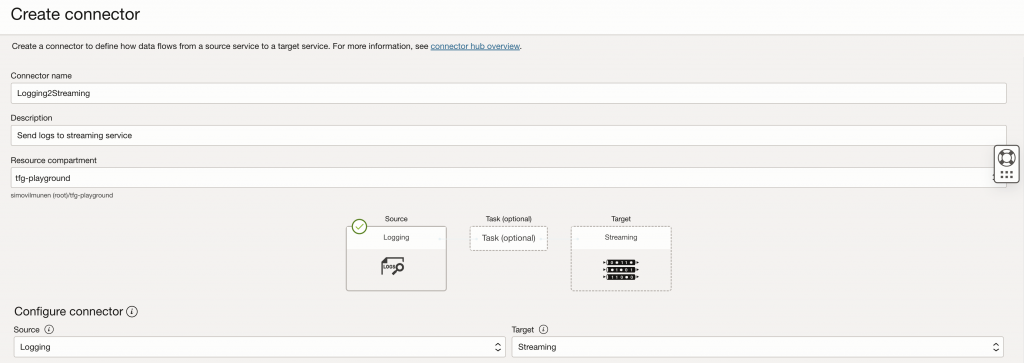

Now that the stream is created, I can go to Connectors under the same navigation path and create one. If you’re on the Logging page, there’s an option to create a connector there as well, which is handy.

I need to configure my source and target. The source can be any of the following:

- Logging

- Streaming

- Monitoring

- Queue

And the target can be:

- Functions

- Notifications

- Streaming

- Object Storage

Here my source is Logging and my target is Streaming. If your target system doesn’t support Streaming, you could always use an Object Storage bucket instead and fetch the logs from there.

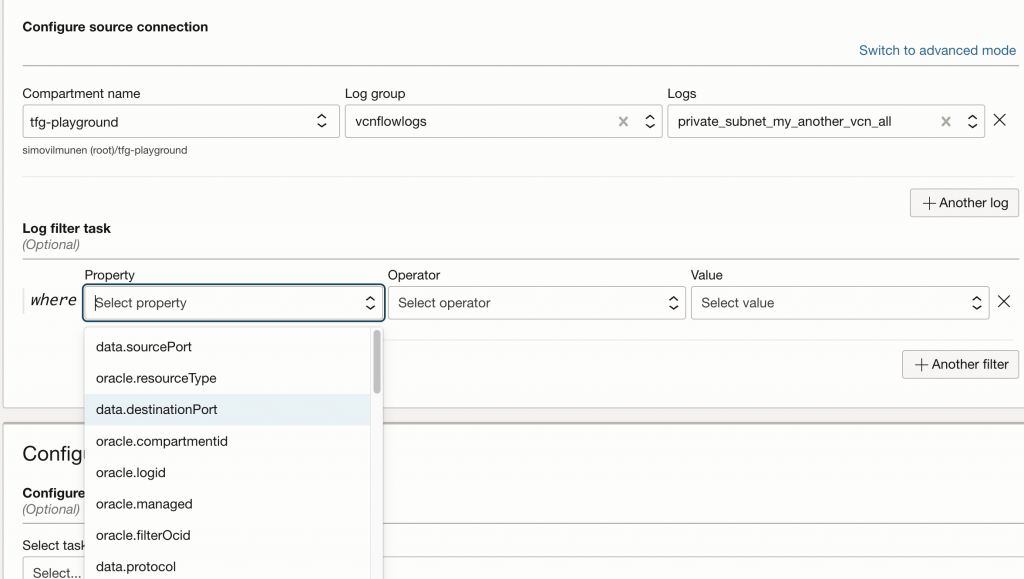

I configure the source connection details, which point to the vcnflowlogs log group I created in the previous post, and specify the logs I want to ingest. I could add optional filter tasks if I’d like; for example, if I only wanted records with data.destinationPort 22 in the stream, I could use that as a filter. This time I’ll just ingest everything.
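To show what a filter on data.destinationPort 22 would keep, here is a client-side sketch that applies the same condition to JSON log lines. This is only an illustration of the filter's effect, not how Service Connector evaluates it. The record shape (a `data` object holding `destinationPort`) is a simplified, hypothetical flow log entry, and the function names are made up for the example.

```python
import json


def matches_destination_port(record: dict, port: int = 22) -> bool:
    """Mimic a condition like data.destinationPort = 22 on one log entry."""
    data = record.get("data") or {}
    return data.get("destinationPort") == port


def filter_flow_logs(lines, port: int = 22) -> list:
    """Parse JSON log lines and keep those whose data.destinationPort equals port."""
    return [r for r in map(json.loads, lines) if matches_destination_port(r, port)]
```

Entries without a `data.destinationPort` field are simply dropped, which is the same outcome you would expect from a filter that only lets port 22 traffic through.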

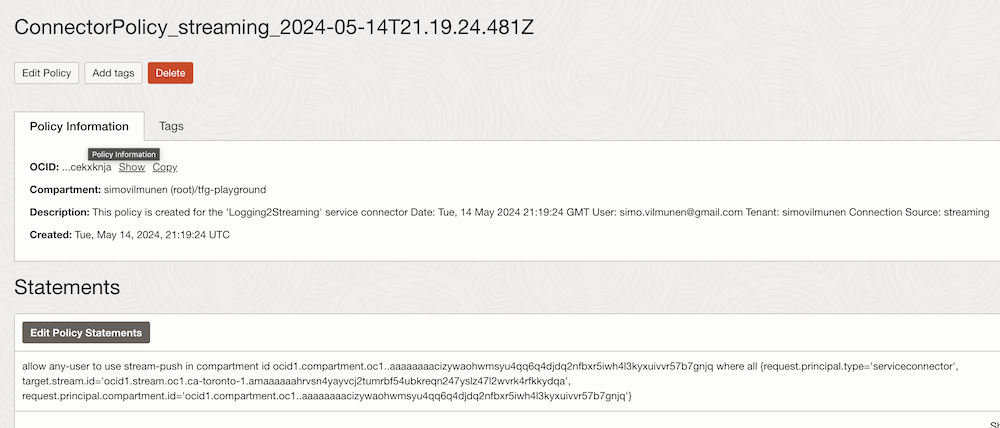

Once I click Create, OCI asks me to create a policy for the service connector. I could write my own policy, but this time I’ll just let OCI create it automatically.

You can see from the screenshot that the policy also identifies the stream by its OCID and that the request principal type is serviceconnector.
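If you’d rather write the policy yourself, here is a sketch that assembles a statement of the shape I understand Oracle documents for a Streaming target. It lets the service connector push to one stream, restricted by principal type, stream OCID and connector compartment. Treat the exact wording as an assumption and compare it with what the console generated for you. The function name and OCIDs are hypothetical.

```python
def connector_stream_push_policy(stream_compartment_ocid: str, stream_ocid: str,
                                 connector_compartment_ocid: str) -> str:
    """Build a policy statement letting a service connector push to one stream.

    Assumption: mirrors the documented default policy for a Streaming target.
    """
    conditions = ", ".join([
        "request.principal.type='serviceconnector'",
        f"target.stream.id='{stream_ocid}'",
        f"request.principal.compartment.id='{connector_compartment_ocid}'",
    ])
    # Doubled braces in the f-string emit literal { } around the conditions.
    return (f"allow any-user to use stream-push in compartment id "
            f"{stream_compartment_ocid} where all {{{conditions}}}")
```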

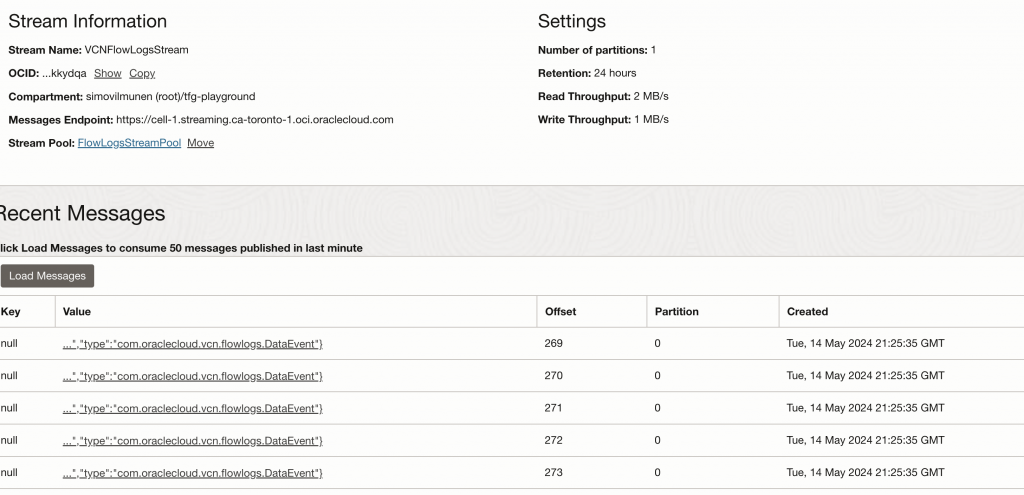

After this, I navigate to my stream to check whether there’s any activity on it. I can see logs arriving in the stream, each with a specific offset and partition.

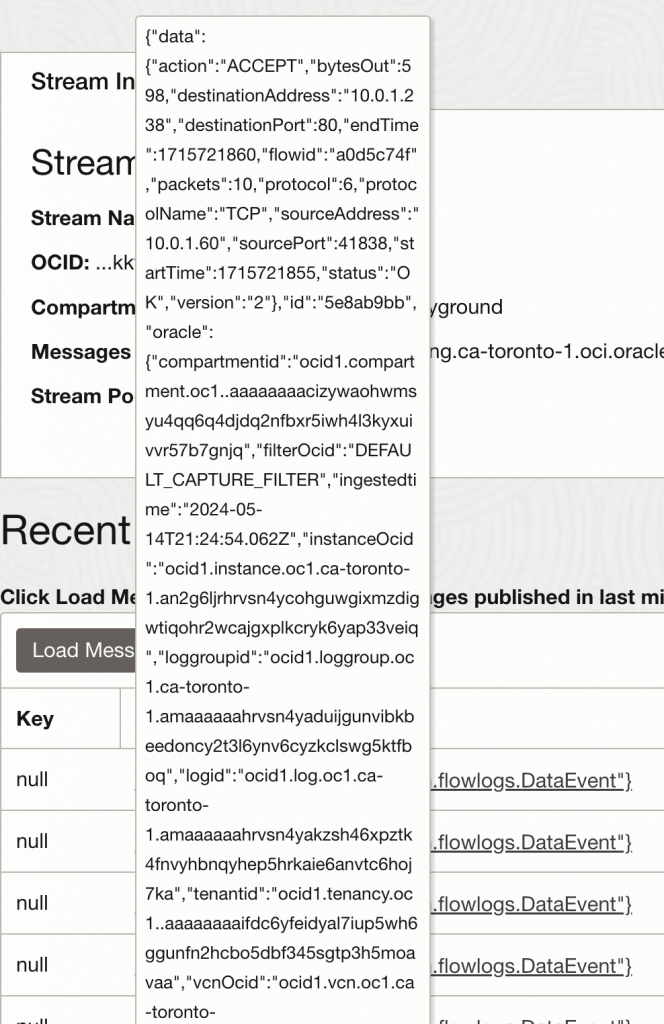

I can hover over a specific row to see the message content a consumer would receive when reading the stream.
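When you read the stream through the Streaming REST API instead of the console, message values come back base64-encoded. Here is a minimal sketch that decodes one message into its partition, offset and log entry. It assumes the message is a dictionary with `partition`, `offset` and `value` fields and that each value is a UTF-8 JSON log entry written by Service Connector. The function name and sample payload are hypothetical.

```python
import base64
import json


def decode_message(message: dict) -> dict:
    """Decode one message as returned by a Streaming GetMessages call.

    Assumption: 'value' is base64-encoded UTF-8 JSON (one log entry).
    """
    payload = base64.b64decode(message["value"]).decode("utf-8")
    return {
        "partition": message.get("partition"),
        "offset": message.get("offset"),
        "log": json.loads(payload),
    }
```

Keeping the partition and offset next to the decoded entry makes it easy to match what you read against the rows shown in the console.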

Summary

This shows how easy it is to push logs and data into Streaming nowadays with OCI Service Connector. If you need to get logs out of OCI, or even route them to a specific target inside OCI, consider Service Connector and Streaming.

In future posts I’ll show how to ingest the data from the stream.